This Week in Security: XRP Poisoned, MCP Bypassed, and More

Researchers at Aikido run the Aikido Intel system, an LLM security monitor that ingests the feeds from public package repositories, and looks for anything unusual. In this case, the unusual activity was five rapid-fire releases of the xrpl package on NPM. That package is the XRP Ledger SDK from Ripple, used to manage keys and build crypto wallets. While quick point releases happen to the best of developers, these were odd, in that there were no matching releases in the source GitHub repository. What changed in the first of those fresh releases?

The most obvious change is the checkValidityOfSeed() function added to index.ts. That function takes a string, and sends a request to a rather odd URL, using the supplied string as the ad-referral header for the HTML request. The name of the function is intended to blend in, but knowing that the string parameter is sent to a remote web server is terrifying. The seed is usually the root of trust for an individual’s cryptocurrency wallet. Looking at the actual usage of the function confirms, that this code is stealing credentials and keys.

The releases were made by a Ripple developer’s account. It’s not clear exactly how the attack happened, though credential compromise of some sort is the most likely explanation. Each of those five releases added another bit of malicious code, demonstrating that there was someone with hands on keyboard, watching what data was coming in.

The good news is that the malicious releases only managed a total of 452 downloads for the few hours they were available. A legitimate update to the library, version 4.2.5, has been released. If you’re one of the unfortunate 452 downloads, it’s time to do an audit, and rotate the possibly affected keys.

Zyxel FLEX

More specifically, we’re talking about Zyxel’s USG FLEX H series of firewall/routers. This is Zyxel’s new Arm64 platform, running a Linux system they call Zyxel uOS. This series is for even higher data throughput, and given that it’s a new platform, there are some interesting security bugs to find, as discovered by [Marco Ivaldi] of hn Security and [Alessandro Sgreccia] at 0xdeadc0de. Together they discovered an exploit chain that allows an authenticated user with VPN access only to perform a complete device takeover, with root shell access.

The first bug is a wild one, and is definitely something for us Linux sysadmins to be aware of. How do you handle a user on a Linux system, that you don’t want to have SSH access to the system shell? I’ve faced this problem when a customer needed SFTP access to a web site, but definitely didn’t need to run bash commands on the server. The solution is to set the user’s shell to nologin, so when SSH connects and runs the shell, it prints a message, and ends the shell, terminating the SSH connection. Based on the code snippet, the FLEX is doing something similar, perhaps with -false set as the shell instead:

$ ssh user@192.168.169.1

(user@192.168.169.1) Password:

-false: unknown program '-false'

Try '-false --help' for more information.

Connection to 192.168.169.1 closed.

It’s slightly janky, but seems set up correctly, right? There’s one more step to do this completely: Add a Match entry to sshd_config, and disable some of the other SSH features you may not have thought about, like X11 forwarding, and TCP forwarding. This is the part that Zyxel forgot about. VPN-only users can successfully connect over SSH, and the connection terminates right away with the invalid shell, but in that brief moment, TCP traffic forwarding is enabled. This is an unintended security domain transverse, as it allows the SSH user to redirect traffic into internal-only ports.

Next question to ask, is there any service running inside the appliance that provides a pivot point? How about PostgreSQL? This service is set up to allow local connections on port 5432 — without a password. And PostgreSQL has a wonderful feature, allowing a COPY FROM command to specify a function to run using the system shell. It’s essentially arbitrary shell execution as a feature, but limited to the PostgreSQL user. It’s easy enough to launch a reverse shell to have ongoing shell access, but still limited to the PostgreSQL user account.

There are a couple directions exploitation can go from there. The /tmp/webcgi.log file is accessible, which allows for grabbing an access token from a logged-in admin. But there’s an even better approach, in that the unprivileged user can use the system’s Recovery Manager to download system settings, repack the resulting zip with a custom binary, re-upload the zip using Recovery Manager, and then interact with the uploaded files. A clever trick is to compile a custom binary that uses the setuid(0) system call, and because Recovery Manager writes it out as root, with the setuid bit set, it allows any user to execute it and jump straight to root. Impressive.

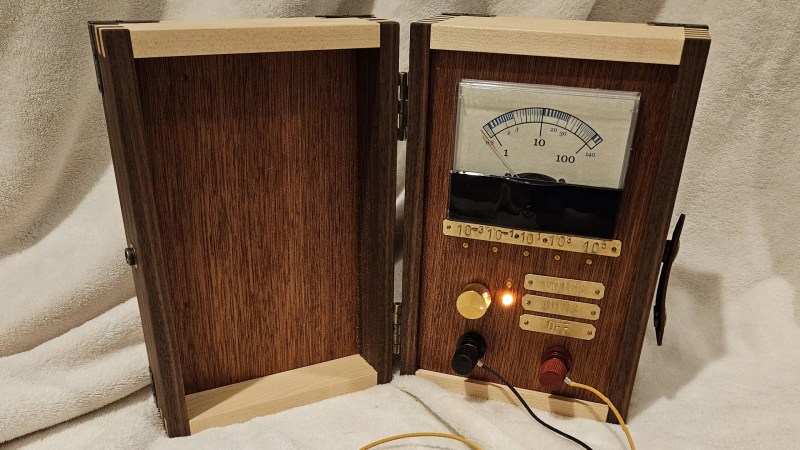

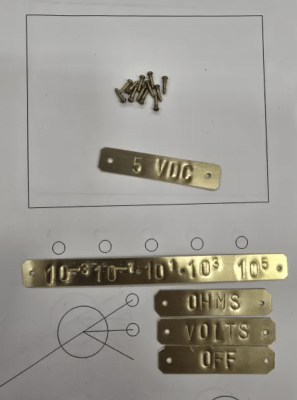

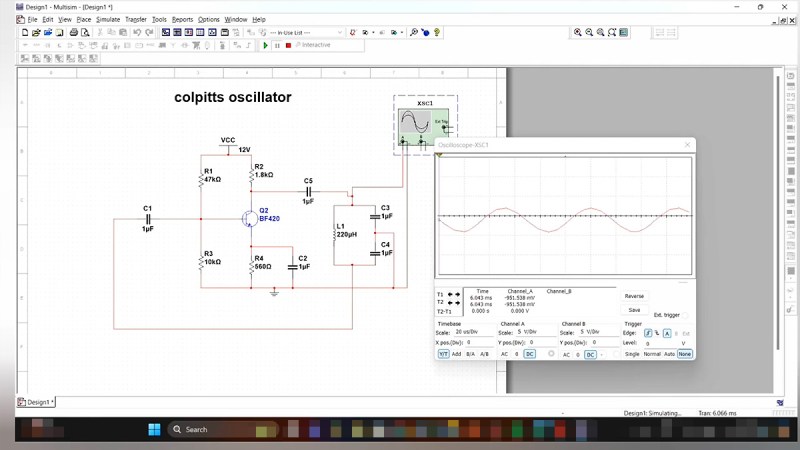

Power Glitching an STM32

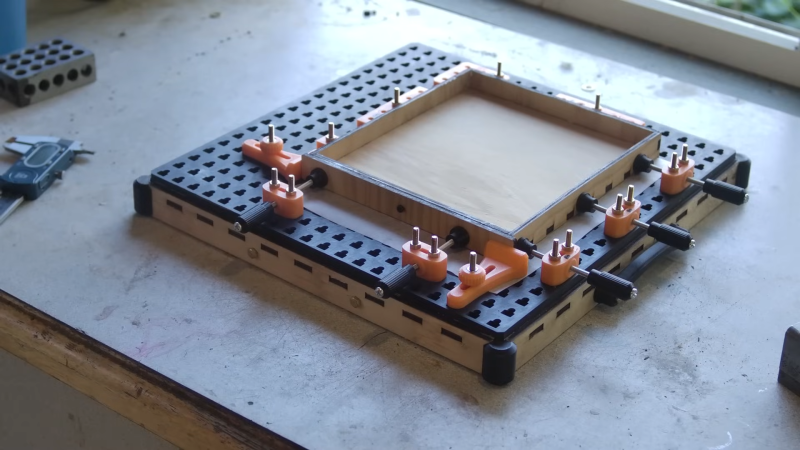

Micro-controllers have a bit of a weird set of conflicting requirements. They need to be easily flashed, and easily debugged for development work. But once deployed, those same chips often need to be hardened against reading flash and memory contents. Chips like the STM32 series from ST Microelectronics have multiple settings to keep chip contents secure. And Anvil Secure has some research on how some of those protections could be defeated. Power Glitching.

The basic explanation is that these chips are only guaranteed to work when run inside their specified operating conditions. If the supply voltage is too low, be prepared for unforeseen consequences. Anvil tried this, and memory reads were indeed garbled. This is promising, as the memory protection settings are read from system memory during the boot process. In fact, one of the hardest challenges to this hack was determining the exact timing needed to glitch the right memory read. Once that was nailed down, it took about 6 hours of attempts and troubleshooting to actually put the embedded system into a state where firmware could be extracted.

MCP Line Jumping

Trail of Bits is starting a series on MCP security. This has echoes of the latest FLOSS Weekly episode, talking about agentic AI and how Model Context Protocol (MCP) is giving LLMs access to tools to interact with the outside world. The security issue covered in this first entry is Line Jumping, also known as tool poisoning.

It all boils down to the fact that MCPs advertise the tools that they make available. When an LLM client connects to that MCP, it ingests that description, to know how to use the tool. That description is an opportunity for prompt injection, one of the outstanding problems with LLMs.

Bits and Bytes

Korean SK Telecom has been hacked, though not much information is available yet. One of the notable statements is that SK Telecom is offering customers a free SIM swapping protection service, which implies that a customer database was captured, that could be used for SIM swapping attacks.

WatchTowr is back with a simple pre-auth RCE in Commvault using a malicious zip upload. It’s a familiar story, where an unauthenticated endpoint can trigger a file download from a remote server, and file traversal bugs allow unzipping it in an arbitrary location. Easy win.

SSD Disclosure has discovered a pair of Use After Free bugs in Google Chrome, and Chrome’s Miracleptr prevents them from becoming actual exploits. That technology is a object reference count, and “quarantining” deleted objects that still show active references. And for these particular bugs, it worked to prevent exploitation.

And finally, [Rohan] believes there’s an argument to be made, that the simplicity of ChaCha20 makes it a better choice as a symmetric encryption primitive than the venerable AES. Both are very well understood and vetted encryption standards, and ChaCha20 even manages to do it with better performance and efficiency. Is it time to hang up AES and embrace ChaCha20?