Mining and Refining: Lead, Silver, and Zinc

If you are in need of a lesson on just how much things have changed in the last 60 years, an anecdote from my childhood might suffice. My grandfather was a junk man, augmenting the income from his regular job by collecting scrap metal and selling it to metal recyclers. He knew the current scrap value of every common metal, and his garage and yard were stuffed with barrels of steel shavings, old brake drums and rotors, and miles of copper wire.

But his most valuable scrap was lead, specifically the weights used to balance car wheels, which he’d buy as waste from tire shops. The weights had spring steel clips that had to be removed before the scrap dealers would take them, which my grandfather did by melting them in a big cauldron over a propane burner in the garage. I clearly remember hanging out with him during his “melts,” fascinated by the flames and simmering pools of molten lead, completely unconcerned by the potential danger of the situation.

Fast-forward a few too many decades and, in an ironic twist, I find myself living very close to the place where all that lead probably came from, a place that was also blissfully unconcerned by the toxic consequences of pulling this valuable industrial metal from tunnels burrowed deep into the Bitterroot Mountains. It didn’t help that the lead-bearing ores also happened to be especially rich in other metals, including zinc and copper. But the real prize was silver, present in such abundance that the most productive silver mine in the world was once located in a place still known as “Silver Valley” today. Together, lead, zinc, and silver made fortunes for North Idaho, with unfortunate side effects from the mining and refining processes used to win them from the mountains.

All Together Now

Thanks to the relative abundance of their ores and their physical and chemical properties, lead, silver, and zinc have been known and worked since prehistoric times. Lead, in fact, may have been the first metal our ancestors learned to smelt. It’s primarily the low melting points of these metals that made this possible; lead, for instance, melts at only 327°C, well within the range of a simple wood fire. It’s also soft and ductile, so easily worked with simple tools that lead beads and wires more than 9,000 years old have been found.

Unlike many industrial metals, lead, silver, and zinc generally aren’t found as oxides. These three metals are far more likely to combine with sulfur, so their ores are mostly sulfide minerals. For lead, the primary ore is galena, or lead(II) sulfide (PbS). Galena is a naturally occurring semiconductor. Its crystals gave their name to the early “crystal radios,” which used a lump of galena probed with a fine wire “cat’s whisker” as a rectifier, or detector, for AM radio signals.

Geologically, galena is found in veins within various metamorphic rocks and in association with a wide variety of other sulfide minerals. Exactly which minerals depends greatly on the conditions under which the rock formed. Galena that crystallized during low-temperature geological processes is likely to be found in limestone deposits. There it sits alongside other sulfides such as sphalerite, or zincblende, an ore of zinc. Galena that forms at higher temperatures, such as in hydrothermal systems, is more likely to be associated with iron sulfides like pyrite, or fool’s gold. Hydrothermal galenas are also more likely to carry silver dissolved into the mineral, which classifies them as argentiferous ores. In some cases, such as the mines of the Silver Valley, the silver is concentrated enough that lead is considered the byproduct rather than the primary product. This is true even though galena is not a primary ore of silver.

Like a Lead Bubble

How galena is extracted and refined depends on where the deposits are found. In some places, galena lies close enough to the surface for open-cast mining. In the Silver Valley, though, the deposits follow deep fissures left by geothermal processes, as they do in other North American locations with commercially significant galena. Deep underground mining is therefore the norm. The scale of some of the Silver Valley mines is hard to grasp. The galena deposits that led to the Bunker Hill claim in the 1880s were found at an elevation of 3,600′ (1,100 meters) above sea level. The shafts and workings of the Bunker Hill Mine now reach 1,600′ (488 meters) below sea level, so miners ride an elevator roughly a mile straight down to get to work.
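
The depth figures can be checked with a few lines of arithmetic. This sketch converts the elevations to meters and measures the vertical span between the discovery elevation and the deepest workings. It assumes, following the article’s framing, that the elevator ride covers that whole span. The variable names are illustrative only.

```python
FOOT_IN_METERS = 0.3048  # international foot, exact by definition
FEET_PER_MILE = 5280


def feet_to_meters(feet: float) -> float:
    """Convert a length or elevation in feet to meters."""
    return feet * FOOT_IN_METERS


discovery_elevation_ft = 3_600  # where the Bunker Hill deposits were found, above sea level
deepest_workings_ft = -1_600    # deepest workings, written as a negative elevation

# Vertical distance between the two elevations
vertical_span_ft = discovery_elevation_ft - deepest_workings_ft

print(f"Discovery elevation: {feet_to_meters(discovery_elevation_ft):,.0f} m")
print(f"Deepest workings: {feet_to_meters(deepest_workings_ft):,.0f} m")
print(f"Vertical span: {vertical_span_ft:,} ft = "
      f"{feet_to_meters(vertical_span_ft):,.0f} m = "
      f"{vertical_span_ft / FEET_PER_MILE:.2f} miles")
```

The span works out to 5,200 feet, about 98% of a mile, so “a mile straight down” is a fair round number.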

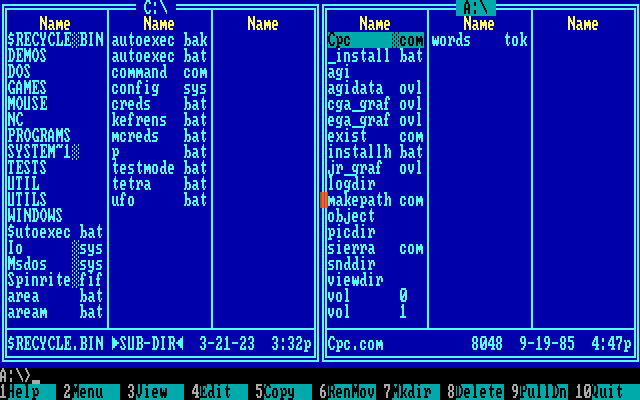

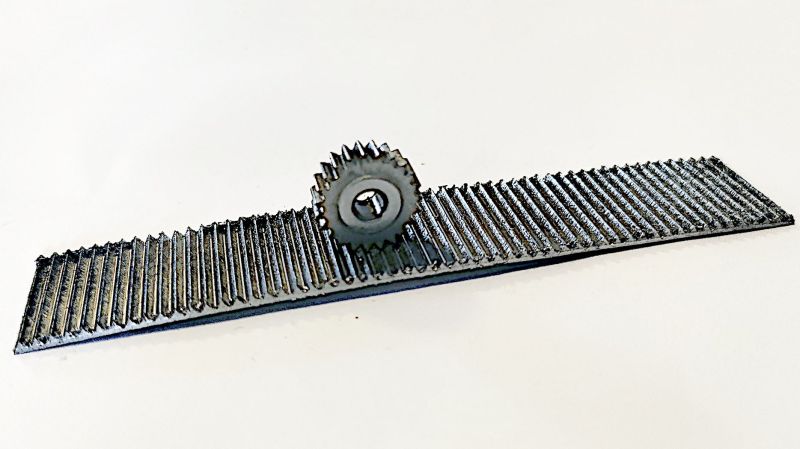

Ore veins are followed into the rock through a series of tunnels and stopes that branch out from vertical shafts. Stopes are cut with the time-honored combination of drilling and blasting, and each blast frees up hundreds of tons of ore. Loose ore is gathered in one of two ways. The first is a slusher, a bucket on a dragline that pulls ore back up the stope. The second is a mining loader, a low-slung payloader built for operating in tight spaces.

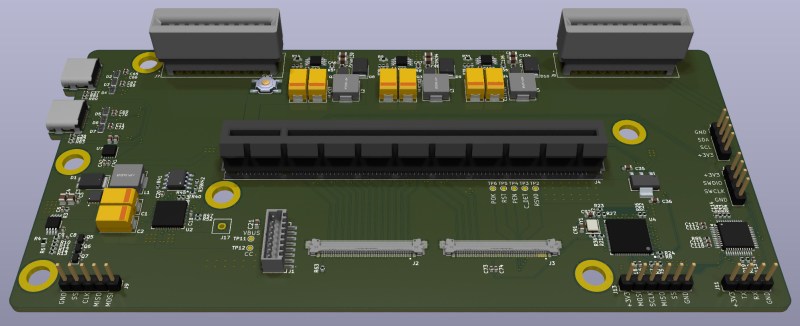

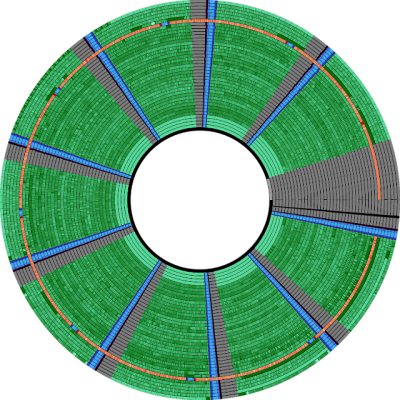

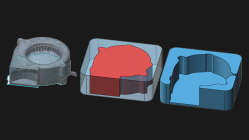

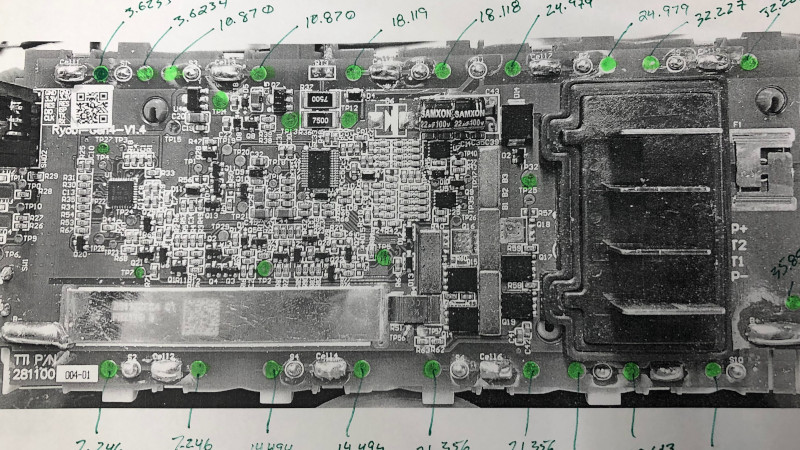

Silver Valley galena typically assays at about 10% lead, making it a fairly rich ore. It’s still not rich enough to smelt directly, though, so it has to be concentrated first. Most mines do the initial concentration on site, starting with the usual crushing, classifying, washing, and grinding steps. Ball mills reduce the ore to a fine powder. The powder is mixed with water and surfactants to form a slurry, which is pumped into a broad, shallow tank. Air pumped into the bottom of the tank forms bubbles. The surfactants make the galena particles water-repellent, so they cling to the bubbles and ride up to the surface. The waste rock particles, or gangue, sink to the bottom. It seems counterintuitive to separate lead by floating it, but froth flotation is quite common in metal refining. We’ve seen it used to concentrate everything from lightweight graphite to ultradense uranium. Note that the froth does not contain elemental lead yet; it’s still the lead sulfide that made up the bulk of the galena ore.
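
Flotation performance is usually judged with a simple mass balance. The sketch below is a minimal illustration, not any mine’s actual calculation. It first converts between lead sulfide and lead grades using the atomic weights of lead and sulfur. It then applies the standard two-product formula, in which f, c, and t are the lead grades of the feed, concentrate, and tailings. The 10% feed grade and the roughly 80% PbS concentrate grade come from this article. The 0.5% tailings grade is a hypothetical value chosen only for illustration.

```python
from __future__ import annotations

# Approximate standard atomic weights, g/mol
PB = 207.2
S = 32.06

PB_IN_PBS = PB / (PB + S)  # mass fraction of lead in pure galena, about 0.866


def pbs_to_pb_grade(pbs_fraction: float) -> float:
    """Express a PbS mass fraction as the equivalent Pb mass fraction."""
    return pbs_fraction * PB_IN_PBS


def pb_to_pbs_grade(pb_fraction: float) -> float:
    """Express a Pb mass fraction as the equivalent PbS mass fraction."""
    return pb_fraction / PB_IN_PBS


def two_product(f: float, c: float, t: float) -> tuple[float, float]:
    """Two-product formula for feed grade f, concentrate grade c, tailings grade t.

    Returns (mass yield to concentrate, fraction of the metal recovered).
    """
    mass_yield = (f - t) / (c - t)
    recovery = c * mass_yield / f
    return mass_yield, recovery


feed = 0.10                   # ~10% Pb in the ore (from the article)
conc = pbs_to_pb_grade(0.80)  # ~80% PbS concentrate (from the article), expressed as Pb
tail = 0.005                  # HYPOTHETICAL tailings grade, for illustration only

mass_yield, recovery = two_product(feed, conc, tail)
print(f"Feed as PbS: {pb_to_pbs_grade(feed):.1%}")
print(f"Concentrate as Pb: {conc:.1%}")
print(f"Mass yield: {mass_yield:.1%}, lead recovery: {recovery:.1%}")
```

With those inputs, about 14% of the ore’s mass reports to the concentrate, carrying roughly 96% of the lead. A richer tailings stream would mean more lead lost with the gangue.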

Once the froth is skimmed off and dried, it’s about 80% pure lead sulfide and ready for smelting. The Bunker Hill Mine used to have the largest lead smelter in the world, but that closed in 1982 after decades of operation that left an environmental and public health catastrophe in its wake. Now, concentrate is mainly sent to smelters located overseas for final processing, which begins with roasting the lead sulfide in a blast of hot air. This converts the lead sulfide to lead oxide and gaseous sulfur dioxide as a waste product:

$$2\,\mathrm{PbS} + 3\,\mathrm{O_2} \rightarrow 2\,\mathrm{PbO} + 2\,\mathrm{SO_2}$$

After roasting, the lead oxide is reduced to elemental lead in a coke-fired blast furnace, where carbon monoxide from the burning coke strips away the oxygen:

$$\mathrm{PbO} + \mathrm{CO} \rightarrow \mathrm{Pb} + \mathrm{CO_2}$$

Any remaining impurities float to the top of the batch as slag, while the molten lead is tapped off from the bottom of the furnace.
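
The roasting and reduction reactions fix the proportions of everything going in and coming out. Roasting is 2 PbS + 3 O₂ → 2 PbO + 2 SO₂. The sketch below assumes the reduction runs through carbon monoxide from the coke (PbO + CO → Pb + CO₂). It also assumes complete conversion and counts only the lead sulfide; the other ~20% of a real concentrate would add its own sulfur dioxide and slag. On that basis it gives the ideal mass balance per tonne of 80% PbS concentrate. The function name `smelt_lead` is hypothetical.

```python
from __future__ import annotations

# Approximate standard atomic weights, g/mol
ATOMIC_MASS = {"Pb": 207.2, "S": 32.06, "O": 15.999, "C": 12.011}


def molar_mass(formula: dict[str, int]) -> float:
    """Molar mass (g/mol) of a formula given as {element: count}."""
    return sum(ATOMIC_MASS[element] * count for element, count in formula.items())


M_PBS = molar_mass({"Pb": 1, "S": 1})
M_O2 = molar_mass({"O": 2})
M_SO2 = molar_mass({"S": 1, "O": 2})
M_CO = molar_mass({"C": 1, "O": 1})
M_PB = molar_mass({"Pb": 1})


def smelt_lead(concentrate_kg: float, pbs_fraction: float) -> dict[str, float]:
    """Ideal mass balance, in kg, for roasting and then reducing the PbS in a concentrate.

    Roasting:  2 PbS + 3 O2 -> 2 PbO + 2 SO2  (1.5 mol O2 in, 1 mol SO2 out per mol PbS)
    Reduction: PbO + CO -> Pb + CO2           (1 mol CO in, 1 mol Pb out per mol PbO)
    Assumes complete conversion and ignores everything except the PbS.
    """
    mol_pbs = concentrate_kg * 1000 * pbs_fraction / M_PBS
    return {
        "O2 consumed": 1.5 * mol_pbs * M_O2 / 1000,
        "SO2 released": mol_pbs * M_SO2 / 1000,
        "CO consumed": mol_pbs * M_CO / 1000,
        "Pb produced": mol_pbs * M_PB / 1000,
    }


for quantity, kg in smelt_lead(1000, 0.80).items():
    print(f"{quantity}: {kg:,.0f} kg per tonne of concentrate")
```

In round numbers, each tonne of concentrate yields nearly 700 kg of lead and more than 200 kg of sulfur dioxide. That goes some way toward explaining the environmental toll described below.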

Zinc!

A significant amount of zinc is also found in the ore veins of the Silver Valley, enough to become a major contributor to the district’s riches. The main zinc ore in the region is the mineral sphalerite. Like galena, it’s a sulfide mineral, but it’s a mixture of zinc sulfide and iron sulfide rather than the more-or-less pure lead sulfide of galena. Sphalerite also tends to be relatively rich in industrially valuable trace metals such as cadmium, gallium, germanium, and indium.

Extraction of sphalerite occurs alongside galena extraction and uses mostly the same mining processes. Concentration also uses the froth flotation method used to isolate lead sulfide, albeit with different surfactants specific for zinc sulfide. Concentration yields a material with about 50% zinc by weight, with iron, sulfur, silicates, and trace metals making up the rest.

The first step in recovering zinc from the concentrate is a roasting process similar to the one used for lead, which yields zinc oxide and more sulfur dioxide:

$$2\,\mathrm{ZnS} + 3\,\mathrm{O_2} \rightarrow 2\,\mathrm{ZnO} + 2\,\mathrm{SO_2}$$

Originally, the Bunker Hill smelter just vented the sulfur dioxide out into the atmosphere, resulting in massive environmental damage in the Silver Valley. My neighbor relates his arrival in Idaho in 1970, crossing over the Lookout Pass from Montana on the then brand-new Interstate 90. Descending into the Silver Valley was like “a scene from Dante’s Inferno,” with thick smoke billowing from the smelter’s towering smokestacks trapped in the valley by a persistent inversion. The pine trees on the hillsides had all been stripped of needles by the sulfuric acid created when the sulfur dioxide mixed with moisture in the stale air. Eventually, the company realized that sulfur was too valuable to waste and started capturing it, and even built a fertilizer plant to put it to use. But the damage was done, and it took decades for the area to bounce back.
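
A little stoichiometry shows why that sulfur was worth capturing. The sketch below assumes a concentrate at about 50% zinc, as described earlier, with all of the zinc present as ZnS. It counts only the sulfur bound to zinc; the iron sulfide would add more. Purely for illustration, it also assumes every mole of captured sulfur dioxide could become one mole of sulfuric acid. The function name is hypothetical.

```python
# Approximate standard atomic weights, g/mol
ZN, S, O, H = 65.38, 32.06, 15.999, 1.008
SO2 = S + 2 * O
H2SO4 = 2 * H + S + 4 * O


def sulfur_from_zinc_roast(concentrate_kg: float, zn_fraction: float) -> dict:
    """Sulfur bound to zinc as ZnS, and what it could become, in kg.

    Assumes every mole of Zn is present as ZnS, every mole of that S leaves
    as SO2 (2 ZnS + 3 O2 -> 2 ZnO + 2 SO2), and, hypothetically, that each
    mole of SO2 could be turned into one mole of H2SO4. Sulfur carried by
    iron sulfides is ignored.
    """
    mol_zn = concentrate_kg * zn_fraction * 1000 / ZN  # 1 mol S per mol Zn in ZnS
    return {
        "S": mol_zn * S / 1000,
        "SO2": mol_zn * SO2 / 1000,
        "H2SO4 (ideal)": mol_zn * H2SO4 / 1000,
    }


for name, kg in sulfur_from_zinc_roast(1000, 0.50).items():
    print(f"{name}: {kg:,.0f} kg per tonne of concentrate")
```

That is roughly half a tonne of sulfur dioxide per tonne of concentrate. In principle, it is enough for about three-quarters of a tonne of sulfuric acid.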

Metallic zinc is recovered from zinc oxide by reduction, again in a coke-fired blast furnace. Zinc boils at 907°C, so it leaves the furnace as vapor. The vapor is collected and condensed to liquid, then tapped off into molds to form ingots. An alternative is electrowinning. Here the zinc oxide is leached with sulfuric acid to form zinc sulfate; the acid is often made from the sulfur recovered during roasting. The zinc sulfate solution is then electrolyzed. Metallic zinc is recovered from the cathodes, melted, further purified if necessary, and cast into ingots.
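
The electrowinning step follows Faraday’s law of electrolysis. Each zinc ion needs two electrons (Zn²⁺ + 2e⁻ → Zn), so the mass deposited is m = I·t·M / (2F). The sketch below applies that formula. The example uses a hypothetical 100 kA circuit running at 90% current efficiency. These are round numbers chosen for illustration, not figures from any particular refinery.

```python
FARADAY = 96_485.332_12  # Faraday constant, C/mol
ZN_MOLAR_MASS = 65.38    # g/mol
ELECTRONS_PER_ZN = 2     # Zn2+ + 2e- -> Zn at the cathode


def zinc_deposited_kg(current_a: float, hours: float, current_efficiency: float = 1.0) -> float:
    """Mass of zinc (kg) plated by a given current over a given time (Faraday's law)."""
    charge_c = current_a * hours * 3600  # coulombs passed
    mol_zn = charge_c * current_efficiency / (ELECTRONS_PER_ZN * FARADAY)
    return mol_zn * ZN_MOLAR_MASS / 1000


print(f"{zinc_deposited_kg(1, 1) * 1000:.3f} g per ampere-hour (ideal)")
# HYPOTHETICAL operating point: 100 kA for one day at 90% current efficiency
print(f"{zinc_deposited_kg(100_000, 24, 0.90):,.0f} kg per day")
```

About 1.22 g of zinc per ampere-hour is the theoretical ceiling. Real cells lose some current to side reactions such as hydrogen evolution, which is what the efficiency factor stands in for.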

Silver from Lead

If the original ore was argentiferous, as most of the Silver Valley’s galena is, now’s the time to recover the silver with the Parkes process, a solvent extraction technique. Here the solvent is molten lead, in which silver is quite soluble. Adding molten zinc pulls the silver out of solution. Zinc has the useful property of combining readily with silver while being largely immiscible with lead. Zinc also has a higher melting point than lead, about 420°C versus 327°C. As the mixture cools, the zinc solidifies first, carrying along the silver it combined with while molten. The zinc-silver crust floats on top of the desilvered lead, where it can be skimmed off. Zinc has a lower boiling point than silver, so it is then driven off by vaporization, leaving behind relatively pure silver.
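
Since the Parkes process is a solvent extraction, a textbook batch-extraction model captures its logic. In the idealized sketch below, silver partitions between zinc and lead at a fixed distribution ratio D. A single equilibrium contact then leaves a fraction 1/(1 + D·Z/L) of the silver in the lead, where Z/L is the zinc-to-lead mass ratio. Both D = 300 and the 1.5% zinc addition are hypothetical numbers chosen to show the trend, not measured values. Real practice also involves intermetallic compounds and temperature-dependent solubility, which this model ignores.

```python
def silver_left_in_lead(distribution_ratio: float, zinc_to_lead: float, stages: int) -> float:
    """Fraction of the silver still dissolved in the lead after `stages` zinc additions.

    Idealized batch-extraction model:
    - the total zinc (as a mass ratio to the lead) is split equally across the stages;
    - at equilibrium in each stage, (Ag per kg zinc) / (Ag per kg lead) == distribution_ratio;
    - the zinc crust is removed completely each time and the lead mass stays constant.
    """
    zinc_per_stage = zinc_to_lead / stages
    fraction_kept_per_stage = 1 / (1 + distribution_ratio * zinc_per_stage)
    return fraction_kept_per_stage ** stages


# HYPOTHETICAL inputs chosen only to show the trend; not measured Parkes-process data
D = 300             # assumed silver distribution ratio, zinc : lead
ZINC_RATIO = 0.015  # assumed total zinc = 1.5% of the lead's mass

for n in (1, 2, 3):
    left = silver_left_in_lead(D, ZINC_RATIO, n)
    print(f"{n} addition(s): {left:.1%} of the silver remains in the lead")
```

Under these assumptions, splitting the same zinc into three additions leaves about a third as much silver behind as a single addition (6.4% versus 18.2%). That is the general solvent-extraction rule that several small extractions beat one large one.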

To further purify the recovered silver, cupellation is often used. Cupellation is a pyrometallurgical process, practiced since antiquity, that purifies noble metals. It exploits the fact that base metals oxidize readily when heated in air, while silver and gold do not. Here, silver still contaminated with base metals such as zinc and lead is heated in a shallow, porous vessel called a cupel until those metals oxidize. Cupels were traditionally made from bone ash, which is mostly calcium phosphate, or other porous, heat-resistant materials. The cupel gradually absorbs the metal oxides, leaving behind a button of purified silver. Cupellation can also be used on its own to recover silver from the lead smelted from argentiferous galena. The cupel absorbs the lead oxide, at the obvious cost of wasting the lead:

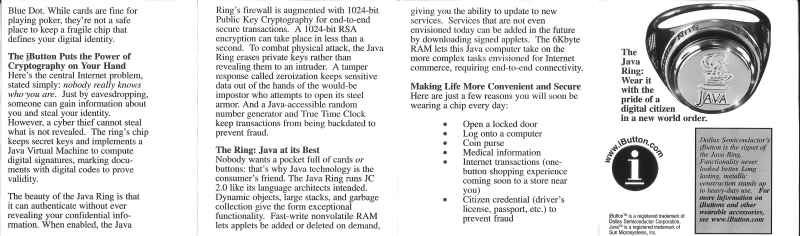

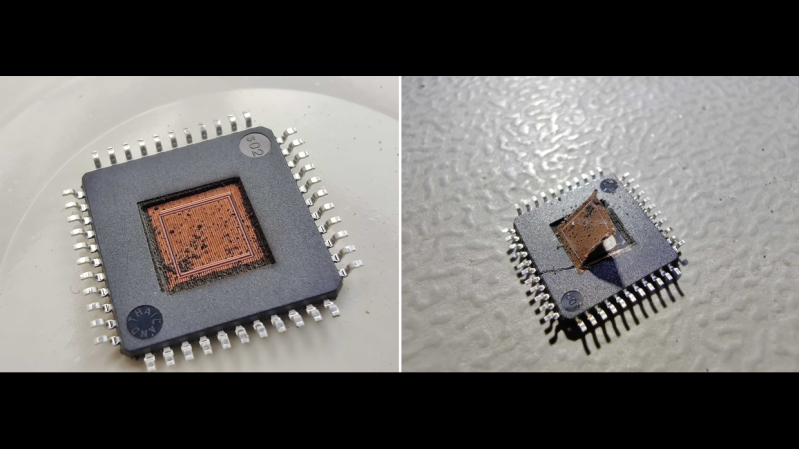
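
Cupellation is also the finishing step of the classic fire assay. Weigh the silver button left on the cupel, and the grade of the original sample follows by simple ratio. The sketch below assumes the button is pure silver. It converts the result to grams per metric tonne and to troy ounces per short ton, a unit still common in North American mining. The 2.5 mg button and 50 g sample are hypothetical.

```python
from __future__ import annotations

TROY_OUNCE_G = 31.1034768  # grams per troy ounce (exact by definition)
SHORT_TON_KG = 907.18474   # kilograms per short ton (exact by definition)


def assay_grade(button_mg: float, sample_g: float) -> tuple[float, float]:
    """Silver grade from a cupellation button, assuming the button is pure silver.

    Returns (grams per metric tonne, troy ounces per short ton).
    """
    grams_per_tonne = (button_mg / 1000) / sample_g * 1_000_000
    oz_per_short_ton = grams_per_tonne * (SHORT_TON_KG / 1000) / TROY_OUNCE_G
    return grams_per_tonne, oz_per_short_ton


# HYPOTHETICAL example: a 2.5 mg silver button from a 50 g lead sample
g_t, oz_t = assay_grade(button_mg=2.5, sample_g=50)
print(f"{g_t:.0f} g/t, or {oz_t:.2f} troy oz per short ton")
```

One troy ounce per short ton is about 34.3 g/t, so the hypothetical result of 50 g/t works out to roughly 1.46 oz/ton.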

The same technique can recover small amounts of silver from refined lead, such as that in wheel weights.

If my grandfather had only known.