USB Stick Hides Large Language Model

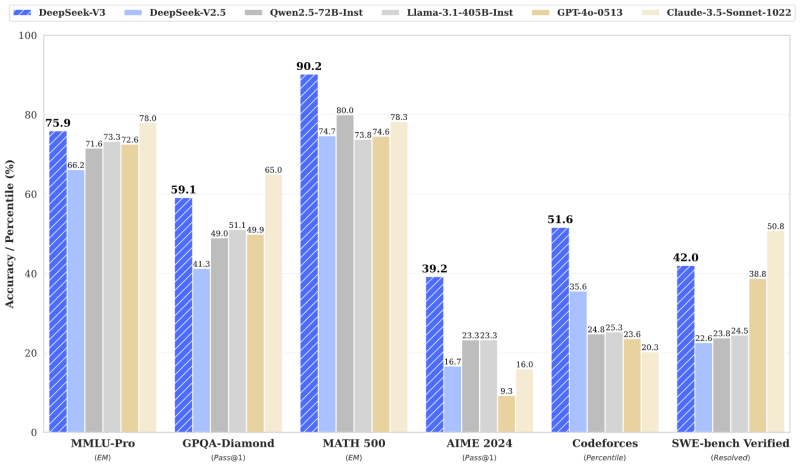

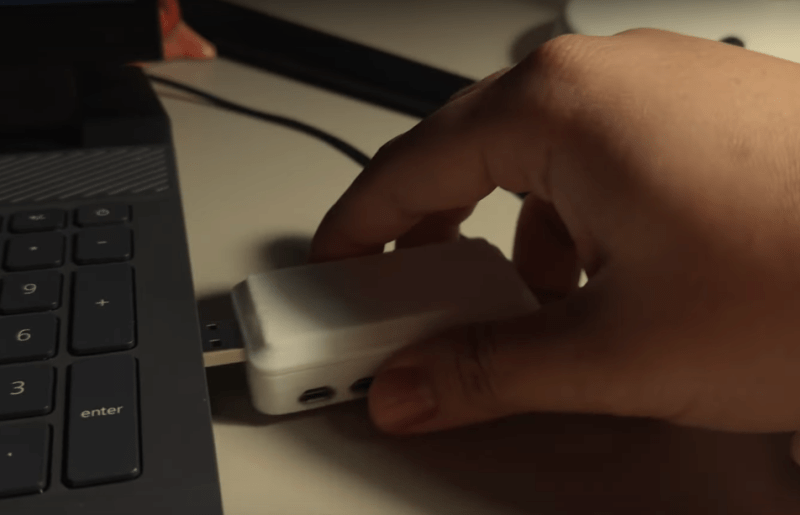

Large language models (LLMs) are all the rage in the generative AI world these days, with the truly large ones like GPT, LLaMA, and others using tens or even hundreds of billions of parameters to churn out their text-based responses. These typically require glacier-melting amounts of computing hardware, but the “large” in “large language models” doesn’t really need to be that big for there to be a functional, useful model. LLMs designed for limited hardware or consumer-grade PCs are available now as well, but [Binh] wanted something even smaller and more portable, so he put an LLM on a USB stick.

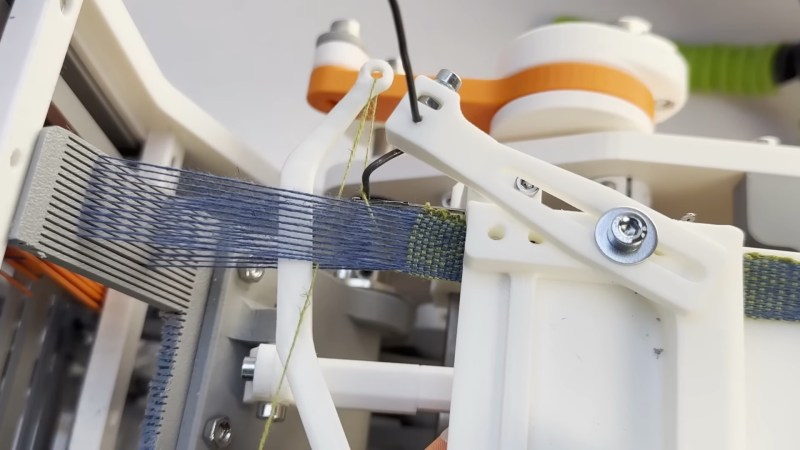

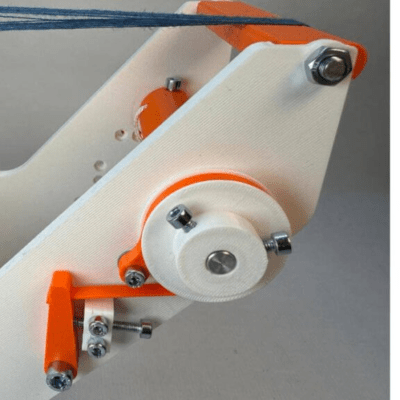

This USB stick isn’t just a jump drive with a bit of memory on it, though. Inside the custom 3D printed case is a Raspberry Pi Zero W running llama.cpp, a lightweight, high-performance version of LLaMA. Getting it on this Pi wasn’t straightforward at all, though, as the latest version of llama.cpp is meant for ARMv8 and this particular Pi was running the ARMv6 instruction set. That meant that [Binh] needed to change the source code to remove the optimizations for the more modern ARM machines, but with a week’s worth of effort spent on it he finally got the model on the older Raspberry Pi.

Getting the model to run was just one part of this project. The rest of the build was ensuring that the LLM could run on any computer without drivers and be relatively simple to use. By setting up the USB device as a composite device which presents a filesystem to the host computer, all a user has to do to interact with the LLM is to create an empty text file with a filename, and the LLM will automatically fill the file with generated text. While it’s not blindingly fast, [Binh] believes this is the first plug-and-play USB-based LLM, and we’d have to agree. It’s not the least powerful computer to ever run an LLM, though. That honor goes to this project which is able to cram one on an ESP32.