New Documentary Details Ventilator Development Efforts During COVID

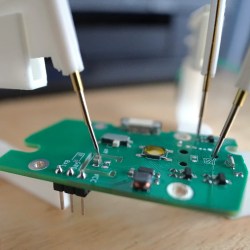

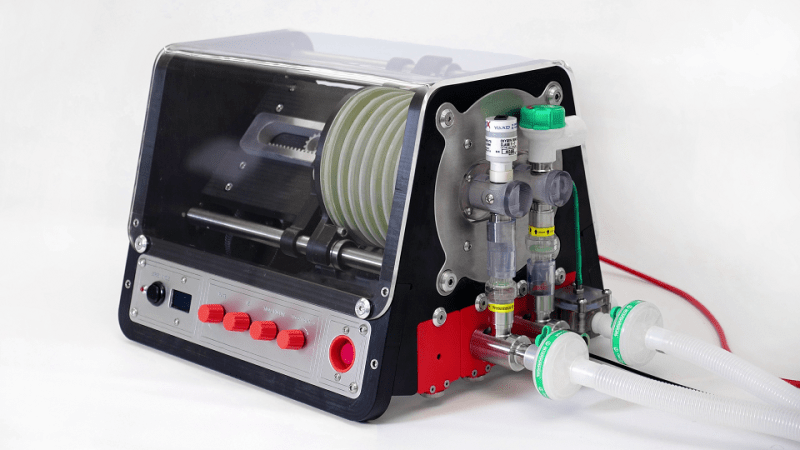

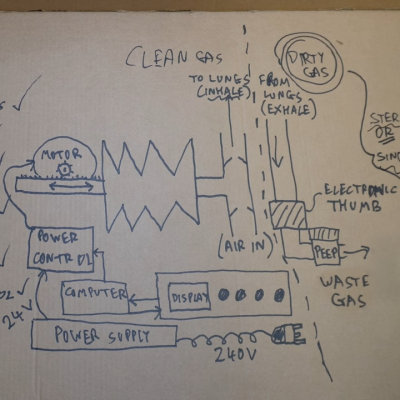

What would it be like to have to design and build a ventilator, suitable for clinical use, in ten days? One that could be built entirely from locally-sourced parts, and that kept oxygen waste to a minimum? This is the challenge [John Dingley] and many others faced at the start of the COVID-19 pandemic, when very little was known for certain.

Back then it was not even known whether a vaccine was possible, or how bad the pandemic would ultimately get. But it was known that hospitalized patients could not breathe without a ventilator, and based on projections it was possible that the UK as a whole could need as many as 30,000 ventilators within eight weeks. In this worst-case scenario the only option would be to build them locally, and towards that end groups were approached to design and build a ventilator, suitable for clinical use, in just ten days.

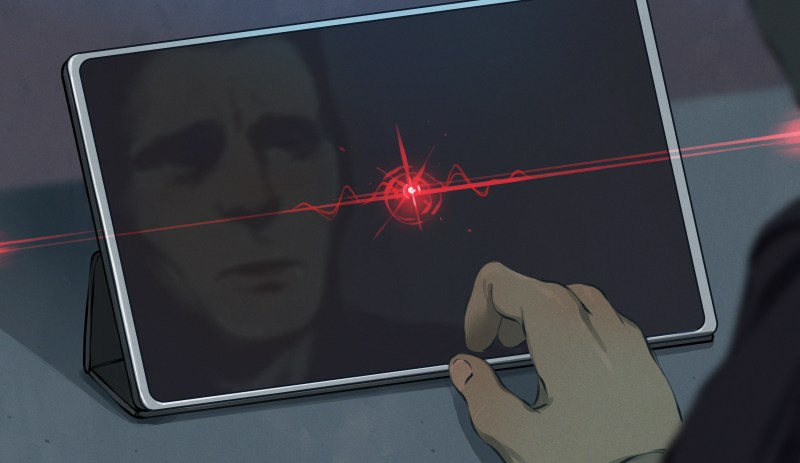

[John] decided to create a documentary called Breathe For Me: Building Ventilators for a COVID Apocalypse, not just to tell the stories of his group and others, but also as a snapshot of what things were like at that time. In short, it was challenging, exhausting, and occasionally frustrating, but also rewarding to be able to actually deliver a workable solution.

In the end, building tens of thousands of ventilators locally wasn’t required. But [John] felt that the whole experience was a pretty unique situation and a remarkable engineering challenge for him, his team, and many others. He decided to do what he could to document it, a task he approached with a typical hacker spirit: by watching and reading tutorials on everything from conducting and filming interviews to using editing software, before deciding to just roll up his sleeves and go for it.

We’re very glad he did, and the effort reminds us somewhat of the book IGNITION!, which aimed to record a history of technical development that would otherwise have simply disappeared from living memory.

You can watch Breathe for Me just below the page break, and there’s additional information about the film if you’d like to know a bit more. And if you are thinking the name [John Dingley] sounds familiar, that’s probably because we have featured his work (mainly on self-balancing personal electric vehicles) quite a few times in the past.