Swapping Batteries Has Never Looked This Cool

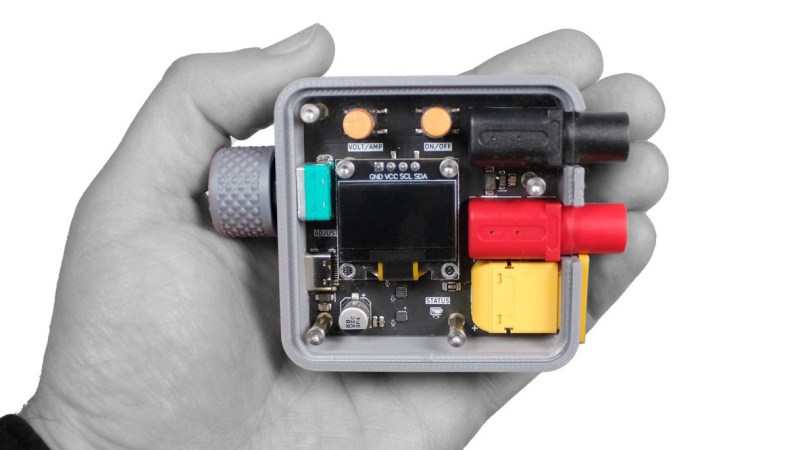

We don’t know much more than what we see in [Kounotori_DIY]’s battery loader design (video embedded below), but it looks so cool we had to share. Watch it in action; it’ll explain itself.

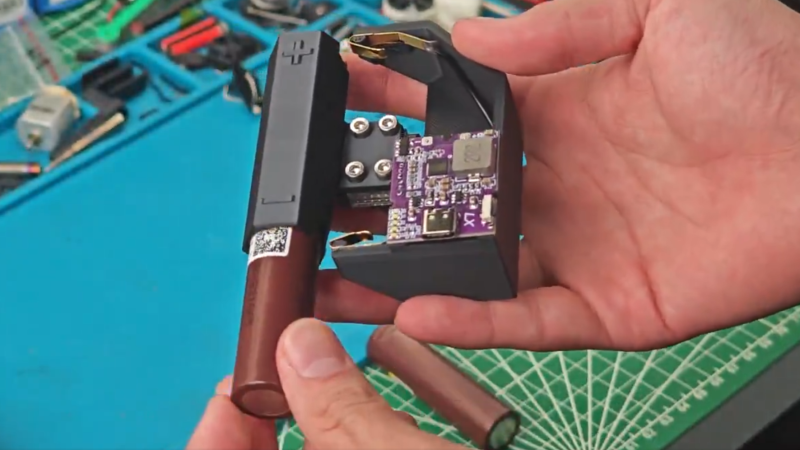

[Kounotori_DIY] uses a small plastic linear guide as the interface for an 18650 battery holder, and as you can see, it’s pretty slick. A little cylindrical container slides out of the assembly, letting the spent cell drop out. Loading a freshly charged cell is just a matter of popping it into the cylinder and snapping the cylinder closed. Electrical contact is made by two springy metal tabs, one at each end, which ride in guides on the cylindrical holder.

It’s just a prototype right now, and [Kounotori_DIY] admits that the assembly is still a bit big and has no solid retention (a good bump will pop the battery out), but we think the design is onto something. We can’t help but imagine how well a stylish battery swap, finished off with a nice solid click, would suit a cyberdeck build.

It’s not every day that someone tries to reimagine the battery holder, let alone with such style. Any ideas on how it could be improved? Have your own ideas for reimagining how batteries are handled? Let us know in the comments!